🎉 Meerschaum v2.3 is here!

It's finally here: Meerschaum v2.3 is live on PyPI! In fact, at the time of writing, v2.3.6 is the latest version. Check out the release notes for the full details; the highlights are below:

Action Chaining

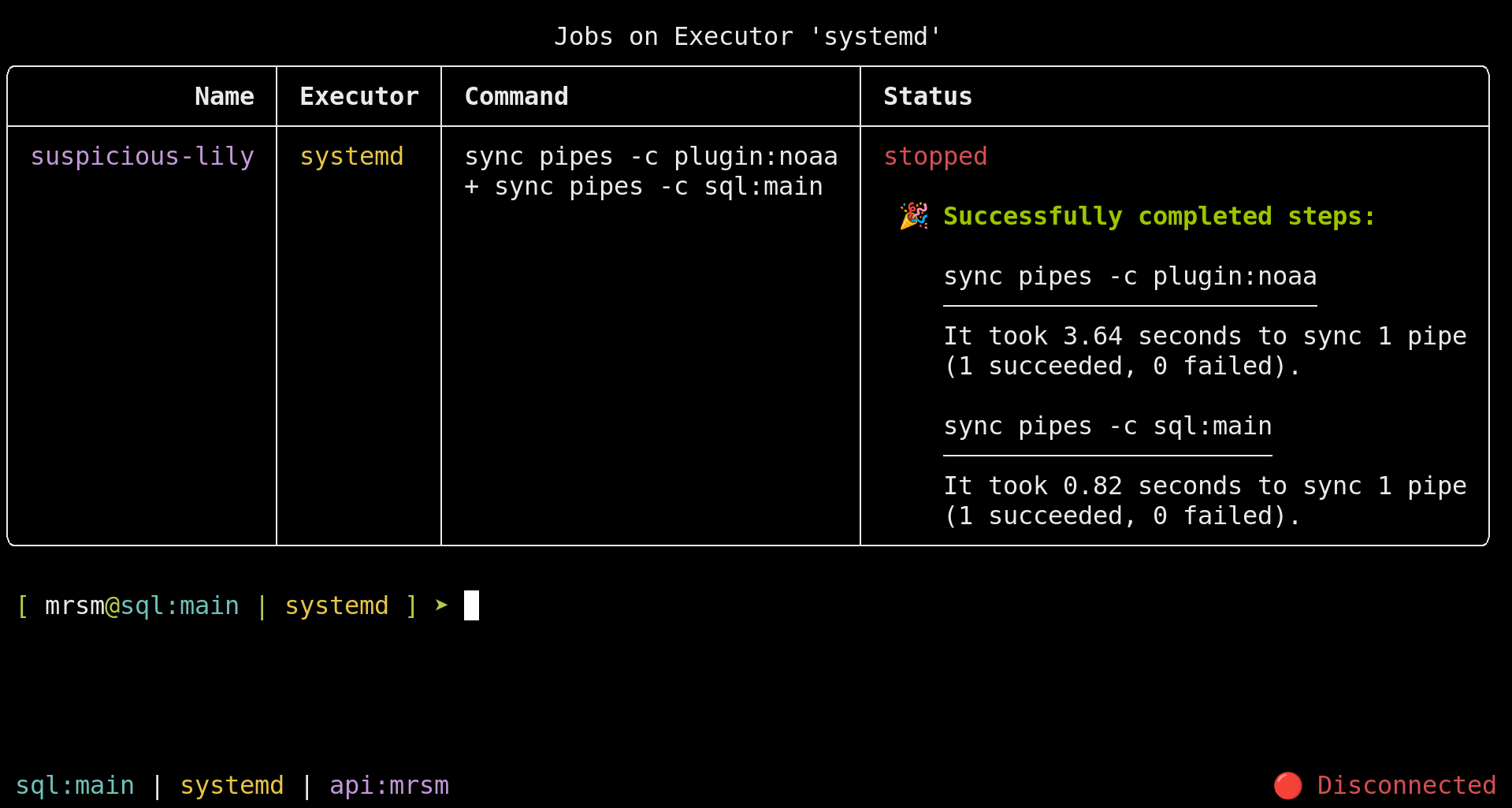

Actions may now be chained together with `+` (similar to `&&` in bash):

```bash
mrsm show pipes + sync pipes
```

Add `-d` to chained actions to run them as a job:

```bash
mrsm sync pipes -c plugin:noaa + \
  sync pipes -c sql:main -d
```

Pipelines

Add `:` to run the chained arguments as a pipeline. Flags after `:` apply to the entire pipeline:

```bash
sing song + show arguments : --loop --min-seconds 30

sync pipes + verify pipes : -s 'daily' -d

show version : x3
```

Pipelines allow scheduling of chained actions.
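To make the `+` / `:` split concrete, here is a minimal Python sketch of how a chained command could be broken into per-action arguments and pipeline-level flags. It is not Meerschaum's actual parser: `split_pipeline` is a hypothetical helper, and it assumes `:` only acts as a separator when it stands alone as its own token, so connector keys like `sql:main` are left untouched.

```python
import shlex
from typing import List, Tuple


def split_pipeline(command: str) -> Tuple[List[List[str]], List[str]]:
    """Hypothetical helper: split a chained command into actions and pipeline flags.

    `+` separates actions; tokens after a standalone `:` are pipeline-level flags.
    """
    tokens = shlex.split(command)
    pipeline_flags: List[str] = []
    if ':' in tokens:
        # Only a bare ':' token counts, so keys like 'sql:main' are untouched.
        idx = tokens.index(':')
        tokens, pipeline_flags = tokens[:idx], tokens[idx + 1:]

    actions: List[List[str]] = [[]]
    for token in tokens:
        if token == '+':
            actions.append([])  # start the next chained action
        else:
            actions[-1].append(token)
    # Drop empty segments, e.g. from a stray trailing '+'.
    return [action for action in actions if action], pipeline_flags
```

For example, `split_pipeline("sync pipes + verify pipes : -s 'daily' -d")` returns `([['sync', 'pipes'], ['verify', 'pipes']], ['-s', 'daily', '-d'])`. The sketch only splits tokens; it does not interpret any flags.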

Here are some useful flags to add to your pipelines:

| Flag | Description |
|---|---|
| `x3` / `3` | Run the pipeline a certain number of times (e.g. `x2` or `2` will run twice). |
| `--loop` | Continuously run the pipeline. |
| `--min-seconds` | How many seconds to sleep between pipeline runs. Defaults to 1. |
| `-s` / `--schedule` / `--cron` | Run the pipeline on a schedule, e.g. `daily`, `every 3 hours`, etc. |
| `-d` / `--daemon` | Create a background job from the pipeline. |
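A rough Python sketch of how the repeat count and `--min-seconds` could interact. Everything here is an illustration under assumptions: `parse_repeat` and `run_pipeline` are hypothetical names, each action is modeled as a callable returning a `(success, message)` tuple, and a failed action is assumed to stop the pipeline, as the `&&` comparison suggests. Passing `times=None` stands in for `--loop`.

```python
import time
from typing import Callable, List, Optional, Tuple

# A (success, message) pair, like the result tuples Meerschaum returns.
SuccessTuple = Tuple[bool, str]


def parse_repeat(flag: str) -> int:
    """Hypothetical: turn a repeat flag like 'x3' or '3' into a run count."""
    return int(flag[1:] if flag.lower().startswith('x') else flag)


def run_pipeline(
    actions: List[Callable[[], SuccessTuple]],
    times: Optional[int] = 1,
    min_seconds: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> SuccessTuple:
    """Hypothetical: run the chained actions `times` times (None loops forever)."""
    runs = 0
    while times is None or runs < times:
        if runs > 0:
            # Pause between runs, like `--min-seconds` (default 1 second).
            sleep(min_seconds)
        for action in actions:
            success, msg = action()
            if not success:
                # Assumed `&&`-style behavior: a failed action halts the pipeline.
                return False, msg
        runs += 1
    return True, f"Completed {runs} run(s)."
```

For example, `run_pipeline(actions, times=parse_repeat('x3'), min_seconds=30)` would run the chain three times with a 30-second pause between consecutive runs. Injecting `sleep` keeps the sketch easy to test without real waiting.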

Jobs

Meerschaum jobs were overhauled to replace `meerschaum.utils.daemon.Daemon`, and the most notable new feature is executors:

Executors

Jobs may be run locally or remotely. Local jobs use the `systemd` executor (if available) or `local`, while remote jobs use the connector keys of an API instance as their executor:

| Executor | Description |
|---|---|
| `systemd` | systemd user service, prefixed with `mrsm-`. |
| `local` | Unix daemon, restarted by the API server. |
| `api:{label}` | Local job on an API instance. |

Add the flag `-e` (`--executor-keys`) to a command to specify the executor. In the Meerschaum shell, run the `executor` command to set a session default (similar to `instance`).

```bash
sync pipes -e api:remote -d
```

Executors let you seamlessly manage API instances.
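The executor rules from the table (systemd when available, otherwise local, and `api:{label}` for remote jobs) can be sketched as a small Python function. `resolve_executor` is a hypothetical helper, not part of Meerschaum, and checking for `systemctl` on the `PATH` is only a stand-in assumption for "systemd is available".

```python
import shutil
from typing import Optional, Tuple


def resolve_executor(
    executor_keys: Optional[str] = None,
    systemd_available: Optional[bool] = None,
) -> Tuple[str, Optional[str]]:
    """Hypothetical helper: map executor keys to (executor type, API label)."""
    if systemd_available is None:
        # Assumption: `systemctl` on the PATH stands in for "systemd is available".
        systemd_available = shutil.which('systemctl') is not None

    if executor_keys is None:
        # No `-e` given: prefer systemd for local jobs, otherwise a local daemon.
        return ('systemd', None) if systemd_available else ('local', None)
    if executor_keys in ('systemd', 'local'):
        return executor_keys, None

    # Remote jobs use API connector keys of the form 'api:{label}'.
    connector_type, _, label = executor_keys.partition(':')
    if connector_type == 'api' and label:
        return 'api', label
    raise ValueError(f"Unrecognized executor keys: {executor_keys!r}")
```

With `-e api:remote`, for instance, the sketch resolves `resolve_executor('api:remote')` to `('api', 'remote')`.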

Python API

Manage your jobs with `mrsm.Job`:

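```python
import meerschaum as mrsm

# Define a job named 'syncing-engine' that runs the action `sync pipes`.
job = mrsm.Job('syncing-engine', 'sync pipes')

# Start the job in the background.
job.start()

# Stream the job's logs.
job.monitor_logs()

# Unpack the job's result into a success flag and a message.
success, msg = job.result
```

Programmatically manage background jobs.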

Remote Actions

You can run actions remotely by adding `-e api:{label}` to your commands (or by setting a session default with `executor` in the mrsm shell). The action runs as a temporary remote job on the API instance, and its output is streamed back to you over a websocket.
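As a rough mental model of the client side: the `-e` keys pick the API instance, and the rest of the command is what runs there. The Python sketch below uses a hypothetical `split_executor_args` (not Meerschaum's argument parser) to separate the executor keys from the remaining arguments; chained `+` and `:` tokens pass through untouched, so a whole pipeline would travel to the API instance as one unit.

```python
from typing import List, Optional, Tuple


def split_executor_args(argv: List[str]) -> Tuple[Optional[str], List[str]]:
    """Hypothetical helper: pull `-e` / `--executor-keys` out of a command.

    The remaining arguments are what would run on the API instance.
    """
    executor_keys: Optional[str] = None
    remaining: List[str] = []
    i = 0
    while i < len(argv):
        arg = argv[i]
        if arg in ('-e', '--executor-keys') and i + 1 < len(argv):
            # Flag and value given as two tokens, e.g. `-e api:prod`.
            executor_keys = argv[i + 1]
            i += 2
            continue
        if arg.startswith('--executor-keys='):
            # Flag and value joined with '=', e.g. `--executor-keys=api:prod`.
            executor_keys = arg.split('=', 1)[1]
        else:
            remaining.append(arg)
        i += 1
    return executor_keys, remaining
```

For example, `split_executor_args(['sync', 'pipes', '-e', 'api:prod'])` yields `('api:prod', ['sync', 'pipes'])`.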

For example, your production API instance `api:prod` may have connectors that are unavailable to your client. Add `-e` to your commands to "remote into" that environment:

```bash
# Execute `sync pipes` on `api:prod`.
sync pipes -e api:prod

# Create the job 'weather' on `api:prod`.
sync pipes -m weather --name weather -e api:prod -d
```

Job management actions are not executed this way; instead, they use the API endpoints directly:

```bash
show logs -e api:prod
```

When remotely running a pipeline of chained arguments, the entire pipeline is run on the API instance:

```bash
show pipes + sync pipes : x3 -e api:main
```

Conclusion

These features complement each other nicely and make the Meerschaum ecosystem more cohesive. Combined, they let you craft powerful commands and give you even more tools to wrangle your data, making this the most robust Meerschaum release to date!